Data quality and data relevance are key

If you have ever taken an introductory computer science class, you are likely familiar with the phrase “garbage in, garbage out.” These four words perfectly express the importance of managing data in the digital age. The soundness of data is as important as the soundness of your model’s underlying logic, development methodology, and application.

While measurable characteristics such as the cleanliness and quality of data are important, the qualitative characteristics of data should not be underestimated. These can be viewed as the data’s real-world application: they help you understand how key data elements represent the business. As such, data relevance should be considered throughout the modeling life cycle.

Best practices

A good data management initiative starts with five best practices.

- Establish data governance standards to ensure a comprehensive, consistent approach.

Like all governance standards, data governance standards establish a comprehensive and consistent approach to managing data across your enterprise—determining how data is treated throughout the modeling process. Your governance standards should define the criteria for acceptable data quality based on industry best practices and regulatory expectations.

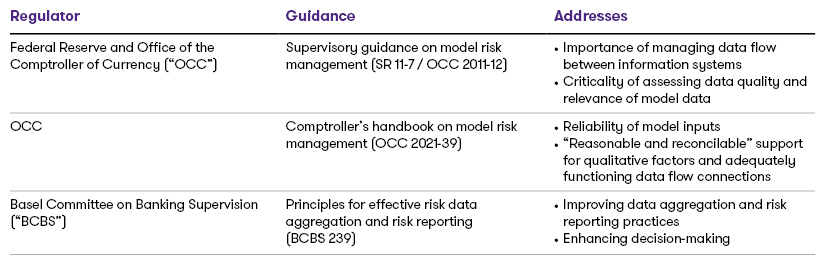

Fortunately, regulatory expectations are readily discerned. Various regulatory bodies have issued guidance related to data management.

This mix of regulatory regimes puts particular emphasis on process, documentation, and reporting. Together, the regimes speak to every aspect of data use in modeling, including data creation, storage, and usage.

- Use lineage analysis to understand data sources, flow, and interaction.

Financial institutions often utilize more than one data system. For example, one system may record transactions for the core banking system, while another stores the databases used to generate management reports. Institutions also purchase access to external data sources to obtain macroeconomic forecasts, investment data, or other similar information.

Data system and lineage analysis provides a comprehensive understanding of these various data sources and of the effects that system updates and issues have on a model. Data flow diagrams visualize data lineage by documenting the inflows and outflows of each system.

Regulations reinforce best practice here. SR 11-7 from the Federal Reserve urges institutions to invest in systems that adequately support data integrity.
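As a rough illustration of the lineage analysis described above, data flows can be represented as a directed graph and traversed to find everything downstream of a system that is about to change. The system and model names below are hypothetical and stand in for an institution's own inventory; this is a minimal sketch, not a prescribed tool.

```python
from collections import deque

# Hypothetical lineage map: each system lists the systems or models it feeds.
LINEAGE = {
    "core_banking": ["data_warehouse"],
    "external_macro_feed": ["data_warehouse"],
    "data_warehouse": ["management_reports", "credit_loss_model"],
    "management_reports": [],
    "credit_loss_model": [],
}


def downstream_of(system, lineage):
    """Return every node reachable from `system`, using breadth-first search."""
    seen = set()
    queue = deque([system])
    while queue:
        current = queue.popleft()
        for target in lineage.get(current, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


# Which reports and models could be affected by a core banking update?
impacted = downstream_of("core_banking", LINEAGE)
print(sorted(impacted))
# ['credit_loss_model', 'data_warehouse', 'management_reports']
```

Keeping the lineage map as plain data means the same structure can drive both the data flow diagram and the impact list.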

- Employ change management strategies to keep stakeholders informed.

Change management (the documentation, governance, tracking, and communication of changes that impact a model’s data) is critical for keeping key stakeholders informed. It can encompass system-wide changes as well as more granular changes to specific records in a dataset, such as the data added when a new product launches. Once informed, users impacted by a change can update their queries accordingly.

Digital audit trails can identify changes and subsequently confirm that the changes applied were authorized.
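One way to picture an audit trail check is as a comparison between logged changes and a register of authorized approvers. The sketch below assumes a hypothetical `ChangeRecord` structure and a hypothetical `AUTHORIZED_APPROVERS` register; real audit logs and approval workflows will differ by system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeRecord:
    """One entry in a hypothetical digital audit trail."""
    change_id: str
    dataset: str
    description: str
    approved_by: Optional[str]  # None means no approval was recorded


# Hypothetical register of who may authorize changes to each dataset.
AUTHORIZED_APPROVERS = {"loan_tape": {"data_steward_a", "data_steward_b"}}


def unauthorized_changes(trail, approvers):
    """Return changes whose approver is missing or not authorized for the dataset."""
    flagged = []
    for record in trail:
        allowed = approvers.get(record.dataset, set())
        if record.approved_by not in allowed:
            flagged.append(record)
    return flagged


trail = [
    ChangeRecord("CHG-1", "loan_tape", "Added new product code", "data_steward_a"),
    ChangeRecord("CHG-2", "loan_tape", "Overwrote balances", None),
]
flagged = unauthorized_changes(trail, AUTHORIZED_APPROVERS)
print([record.change_id for record in flagged])
# ['CHG-2']
```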

- Proactively manage issues.

As organizations strive to improve, stakeholders periodically identify data management issues across the three lines of defense. When this happens, best practice is to promptly develop an action plan and monitor its progress until the issue is fully resolved. Including root cause analysis in this process helps organizations prevent issues from recurring.

Ultimately, data issue management serves as a control that helps achieve accurate management and financial reporting.

- Consider all relevant dimensions of data quality.

The UK Government’s Data Quality Hub has identified six dimensions that can define data quality: accuracy, completeness, consistency, uniqueness, timeliness, and validity. By establishing metrics that align to these dimensions, organizations can react quickly and objectively to current data performance. Combined with input from management, these metrics can promote more effective decision-making, whether that means addressing issues or seizing enhancement opportunities.
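To make the idea of dimension-aligned metrics concrete, the sketch below computes simple ratios for three of the six dimensions (completeness, uniqueness, and validity) over a small set of records. The field names, the sample records, and the rule that a rate must fall between 0 and 1 are all illustrative assumptions; an institution would define its own metrics and rules.

```python
# Hypothetical loan records; the field names are illustrative only.
records = [
    {"loan_id": "A1", "balance": 1000.0, "rate": 0.05},
    {"loan_id": "A2", "balance": None, "rate": 0.04},
    {"loan_id": "A2", "balance": 500.0, "rate": 1.70},
    {"loan_id": "A3", "balance": 750.0, "rate": 0.06},
]


def completeness(rows, field):
    """Share of rows where `field` is populated (assumes `rows` is non-empty)."""
    return sum(row.get(field) is not None for row in rows) / len(rows)


def uniqueness(rows, field):
    """Distinct values of `field` divided by the number of rows."""
    return len({row.get(field) for row in rows}) / len(rows)


def validity(rows, field, rule):
    """Share of rows whose `field` value is populated and satisfies `rule`."""
    return sum(
        row.get(field) is not None and rule(row[field]) for row in rows
    ) / len(rows)


print(completeness(records, "balance"))                      # 0.75
print(uniqueness(records, "loan_id"))                        # 0.75
print(validity(records, "rate", lambda r: 0.0 <= r <= 1.0))  # 0.75
```

Accuracy, consistency, and timeliness usually need a reference source or a timestamp to measure against, so they are left out of this sketch.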

From best practices to standard practice

Data management is a core enterprise-wide component of any internal control framework. It takes the form of periodic data quality monitoring, model validation, and audits.

Specifically, a data quality monitoring framework combines data governance standards and the six dimensions of data quality. During each periodic model run, data quality monitoring processes can flag data issues by comparing data quality metrics to thresholds and escalation triggers.
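The comparison of metrics to thresholds and escalation triggers might look like the following sketch. The threshold values and the three status labels are assumptions chosen for illustration; actual limits would come from the institution's data governance standards.

```python
# Hypothetical limits: a metric below `warn` is flagged,
# and a metric below `escalate` triggers escalation.
THRESHOLDS = {
    "completeness": {"warn": 0.98, "escalate": 0.95},
    "validity": {"warn": 0.99, "escalate": 0.97},
}


def assess(metrics, thresholds):
    """Map each metric to 'ok', 'warn', or 'escalate' based on its limits."""
    statuses = {}
    for name, value in metrics.items():
        limits = thresholds[name]
        if value < limits["escalate"]:
            statuses[name] = "escalate"
        elif value < limits["warn"]:
            statuses[name] = "warn"
        else:
            statuses[name] = "ok"
    return statuses


# Metrics produced by one periodic model run (illustrative values).
run_metrics = {"completeness": 0.96, "validity": 0.995}
statuses = assess(run_metrics, THRESHOLDS)
print(statuses)
# {'completeness': 'warn', 'validity': 'ok'}
```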

While model validation encompasses a variety of tasks, the data component focuses on evaluating development data—the data the model is built on—and the input data which periodically flows into the model. Development data can be further segmented into training, validation, and test data.

SR 11-7 calls for an in-depth review of development data and its applicability to the institution’s business or specific portfolio, with special attention paid to process verification of input data. This step asks: “Do model components function as intended?” Answering that question typically means reviewing data flow diagrams and data quality monitoring reports. It also means performing tests to reconcile the data stored in the model to the source data. Similar exercises can be performed by the internal audit function to verify that data management controls are designed and operating effectively.
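A reconciliation test of model data against source data can be sketched as a keyed comparison that reports missing records, unexpected records, and value mismatches. The account identifiers, balances, and the 0.01 tolerance below are hypothetical; they only illustrate the shape of such a test.

```python
import math


def reconcile(source, model, tolerance=0.01):
    """Compare two {key: amount} mappings and report the differences."""
    source_keys, model_keys = set(source), set(model)
    mismatched = sorted(
        key
        for key in source_keys & model_keys
        # Absolute tolerance only, so small rounding differences pass.
        if not math.isclose(source[key], model[key], rel_tol=0.0, abs_tol=tolerance)
    )
    return {
        "missing_in_model": sorted(source_keys - model_keys),
        "unexpected_in_model": sorted(model_keys - source_keys),
        "mismatched": mismatched,
    }


# Illustrative balances from a source system and from the model's data store.
source_balances = {"A1": 1000.00, "A2": 500.00, "A3": 750.00}
model_balances = {"A1": 1000.00, "A2": 505.00, "A4": 20.00}

result = reconcile(source_balances, model_balances)
print(result)
# {'missing_in_model': ['A3'], 'unexpected_in_model': ['A4'], 'mismatched': ['A2']}
```

An empty list in all three fields indicates the two data sets reconcile within the chosen tolerance.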

Furthermore, many institutions have established enterprise-wide data governance programs to ingrain the importance of data management in the culture. These programs often appoint data stewards to lead data management functions for their business units and to oversee data management for the models those units own.

The human element

In a world of standardization and automation, it is easy to lose sight of the big picture. Technology can assist in streamlining data management through enhanced data quality monitoring processes. Metrics for key data elements can be generated at the click of a button.

However, the role of human judgment has never been more important. Data relevance can only be evaluated through the lens of management expertise. Computers can free personnel to focus on analyzing data applicability to the business and evaluating the best course of action to address threshold breaches.

Ultimately, making well-thought-out decisions about the data being used is the cornerstone of a successful data strategy.
